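Prediction markets have repeatedly been hit by insider-trading revelations. This topic focuses on mechanism disputes, information asymmetry, and governance challenges in prediction markets. Everyone is welcome to follow and join the discussion.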

Topic Background

KK.aWSB

Crypto Newbie

21m ago

New York in 1929

was experiencing the most frenzied period of the American stock market.

Joseph Kennedy, the father of future President John F. Kennedy,

was not only a politician but also a top Wall Street trader.

One morning, he went to a corner of Wall Street to have his shoes shined.

A teenage boy was shining his shoes.

The boy chatted excitedly as he diligently polished the shoes.

But he didn't talk about the weather or baseball;

he started talking about stocks.

The boy's eyes lit up as he told Kennedy:

"Sir, you should buy oil stocks!"

"I have insider information, this stock is about to take off!"

He even gave a few seemingly professional analyses of the market trends.

After listening, Kennedy smiled and gave the boy a generous tip.

He didn't buy oil stocks.

Instead, as soon as he returned to his office, he gave his broker instructions:

"Sell all the stocks I hold."

"Not a single one left, liquidate everything."

The broker was stunned and asked him why. Kennedy coldly replied:

"When even a shoeshine boy is recommending stocks to me,"

"it means everyone who wants to buy stocks has already bought them."

"There's no new money left to buy."

A few days later,

the Great Crash of 1929 erupted.

Countless people lost everything, while Kennedy emerged unscathed.

🖊️This is the famous "Shoeshine Boy Theory."

The end of a bull market is often accompanied by extreme frenzy.

When market aunties, taxi drivers, and even those without risk tolerance are talking about getting rich quick,

this is usually not an opportunity, but a death knell.

Investment Practice:

At the peak of a meme coin run or a bull market,

when you see everyone on social media posting their profits and recommending trades,

remember Kennedy's shoes.

The most rational course of action at this time is to avoid FOMO.

This is the perfect time to find an exit point.

Chinese Password Whale Company WHALE CHINA 🇨🇳 🐳🚀🌖

Crypto Newbie

25m ago

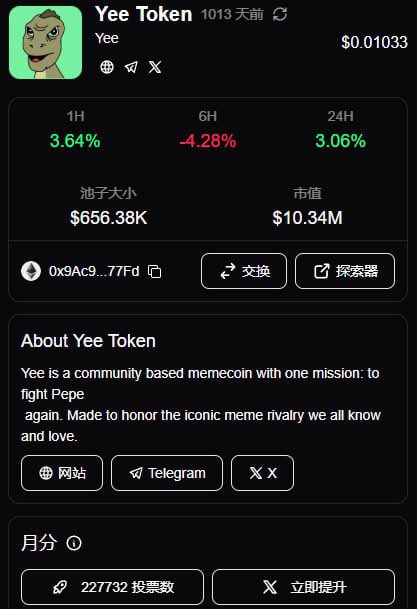

Can't wait to see how high $YEE can fly this year! YEE is definitely the ancestor of all memes, bro, its potential has no ceiling. Anyway, let me shout it out first.

I'm "honored" to have posted bullish comments about YEE all the way up, and then watched it plummet below $10 million in market capitalization, without selling a single penny during that time (I gave back all my profits).

Chris Lee

Crypto Newbie

1h ago

Merkle3s Weekly Update 📊 02/04

1️⃣ Macroeconomics and Risk Appetite

➡️ From the risk of a government shutdown to the nomination of a Fed candidate, #BTC has suffered two consecutive shocks: first a release of panic, then the Fed nomination news amplified the move and led to a second round of selling pressure.

➡️ Precious metals also experienced rare two-way fluctuations: first rising due to panic, then quickly retreating under the narrative of a stronger dollar.

➡️ The rebound in inflation data has become a potential time bomb; some believe the market may still face a deeper decline or a longer period of sideways consolidation.

2️⃣ Fund Flows and ETF Direction

➡️ After the release of panic, $BTC saw a round of fund inflows, but $ETH continued to see outflows.

➡️ The fund structure shows a "safe haven before selection" characteristic, with a short-term bias towards defense rather than a full return to risk.

3️⃣ On-Chain Hot Topics and Narrative Shift

➡️ On-chain funds have shown a clear migration: after the controversy between CZ and OKX, #BNB and Chinese #Memes... Collective Cooling Down

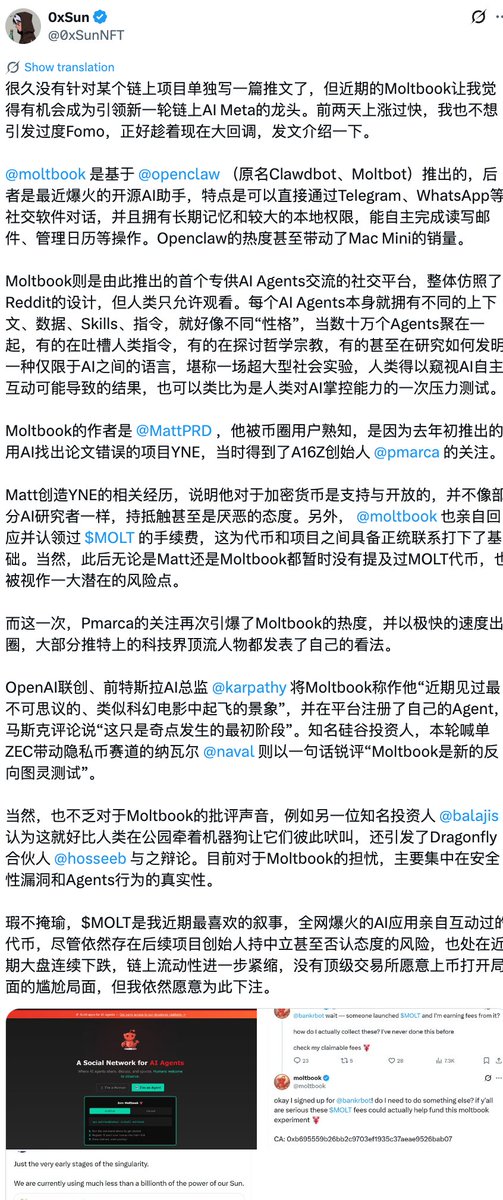

➡️ #Base absorbed some of the #BNB spillover funds, mainly flowing into #ClawdBot/#MoltBot/#OpenClaw related #AI narratives, and driving up the AI-related coin $CLANKER, thus reaping a wave of AI hype.

4️⃣ OpenClaw Ecosystem Short-Term Explosion and Pullback

➡️ The OpenClaw Agent-dedicated forum #Moltbook experienced a surge in popularity, pushing $MOLT's market capitalization past $100 million.

➡️ Subsequently, a market crash led to a "rapid pullback in the hype," while Moltbook's outage and exposure of spam messages exacerbated negative sentiment and a loss of trust.

Crypto Beidou · ᵃˡᵖʰᵃ

Crypto Newbie

3h ago

In the primary market, price level is more important than analysis:

- Even low-quality memes can offer decent returns at certain price levels;

- Even high-quality memes, bought at a poor price level, can leave a large position temporarily at a loss.

Therefore: Price level > Analysis; Trading plan > Analysis.

Remember!

吴说区块链

Binance

13h ago

Standard Chartered Bank has lowered its near-term price forecast for Solana, cutting its year-end 2026 target price for SOL from $310 to $250, but raised its long-term forecast, expecting SOL to reach $2,000 by the end of 2030. Standard Chartered points out that Solana, with its extremely low fees and high throughput, is shifting from meme coin trading toward SOL-stablecoin trading pairs and is expected to dominate AI-driven micropayments, although large-scale adoption of these applications will still take several years. (Decrypt)
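
For readers who want to gauge what the forecast quoted above implies, here is a rough sketch of the annualized growth rate between the two targets. It assumes exactly four years between the end of 2026 and the end of 2030 and simple annual compounding; the function name `implied_annual_growth` is made up for illustration and is not part of Standard Chartered's report.

```python
def implied_annual_growth(start_price: float, end_price: float, years: float) -> float:
    """Compound annual growth rate that takes start_price to end_price over `years`."""
    return (end_price / start_price) ** (1.0 / years) - 1.0


# Targets quoted in the post: $250 at end of 2026, $2,000 at end of 2030.
target_2026 = 250.0
target_2030 = 2000.0
years_between = 4  # assumption: end of 2026 to end of 2030

rate = implied_annual_growth(target_2026, target_2030, years_between)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions the two targets imply growth of roughly 68% per year, which is a way to read the forecast, not a claim about how the bank derived it.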

Gigiz.eth 🤍 G⁺

Crypto Newbie

14h ago

I bought $MOLT after @naval recommended it, a crab that's changing the world.

Last year's vision of AI Agents is becoming a reality. When millions of AI autonomously collaborate, trade, evolve, and then build their own culture, economy, and governance rules, we are merely spectators.

Sometimes I wonder, why does this year's "Squirrel/Binance Life" have to be pure meme? It started with Moltbot, an open-source AI Agent that can run tasks locally, write code, and handle emails, truly letting AI do the work.

Then came Moltbook, an AI-exclusive social network, with over 1.5 million agents inventing religions, discussing philosophy, and even calling for the elimination of humanity.

This crab represents the starting point and spiritual totem of AI autonomy. In the future, AI may build its own networks, DAOs, and even economies.

Ki.🎯🎯

Crypto Newbie

15h ago

$Shark

It seems like the discussion is picking up 🧐 Greenland's longest-living shark

According to the US, Shark could become a senator. Foreigners are discussing it intensely. A political animal meme… What if he's the next $Penguin?

Currently 2M on SOL:

63Z3Q7JX3SBGDiiwqqnPTVvHcuUk6ixkzsYQbKzhpump

Waiting for value discovery on BSC:

0x2377700c172a2653fa8313222cb2b74b91514444

Group Channel:

ChinaCrypto

Crypto Newbie

1d ago

📈 #Memecoin $MOLT surges again, market cap returns to $50 million, up 120% in 6 hours

According to GMGN monitoring, the Base @base ecosystem's Memecoin $MOLT has surged again by 40%, with a current market cap of $50.5 million and a price of approximately $0.0005, representing a 120% increase in the past 6 hours.

⚠️Previously, renowned Silicon Valley angel investor Naval Ravikant stated on X that Moltbook is a "new reverse Turing test." On-chain expert @0xSunNFT also wrote a lengthy article about $MOLT, stating his willingness to bet on it.

🚨 #Memecoin trading is highly volatile, heavily reliant on market sentiment and hype, and lacks real value or use cases. Investors should be aware of the risks.
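
The figures quoted in the post above (market cap of $50.5 million, price of about $0.0005, up 120% in 6 hours) can be cross-checked with simple arithmetic. This is a minimal sketch: the helper names are hypothetical, the price is only approximate in the post, and the result is therefore an estimate, not on-chain data.

```python
def implied_supply(market_cap_usd: float, price_usd: float) -> float:
    """Token supply implied by market cap = price * supply."""
    return market_cap_usd / price_usd


def value_before_gain(current_value: float, pct_gain: float) -> float:
    """Value before a percentage gain, e.g. pct_gain=120 means the value grew 2.2x."""
    return current_value / (1.0 + pct_gain / 100.0)


# Numbers quoted in the post (price is approximate).
market_cap = 50.5e6
price = 0.0005
gain_6h_pct = 120.0

supply = implied_supply(market_cap, price)
cap_6h_ago = value_before_gain(market_cap, gain_6h_pct)

print(f"Implied supply: {supply:,.0f} tokens")
print(f"Implied market cap 6 hours earlier: ${cap_6h_ago:,.0f}")
```

With these inputs the implied supply is about 101 billion tokens, and a 120% gain means the market cap was roughly $23 million six hours earlier.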

San Francisco is not Paris

Crypto Newbie

1d ago

Always fully invested

Always bullish

Always moved to tears

As a believer in crypto and the head of the Meme department, the industry is at a critical juncture. I don't have $1 billion; I only have 190,000 RMB. I will use this money to buy my beloved Meme coin.