Topic Background

RYAN SΞAN ADAMS - rsa.eth

Crypto Newbie

15h ago

On-chain AI agents will drive cryptocurrency market capitalization past $10 trillion.

If you're bullish on OpenClaw, you're bullish on cryptocurrency.

Two points to note:

1) Cryptocurrency adoption has stalled due to poor user experience.

2) What's a "bad user experience" for humans is an excellent user experience for AI agents.

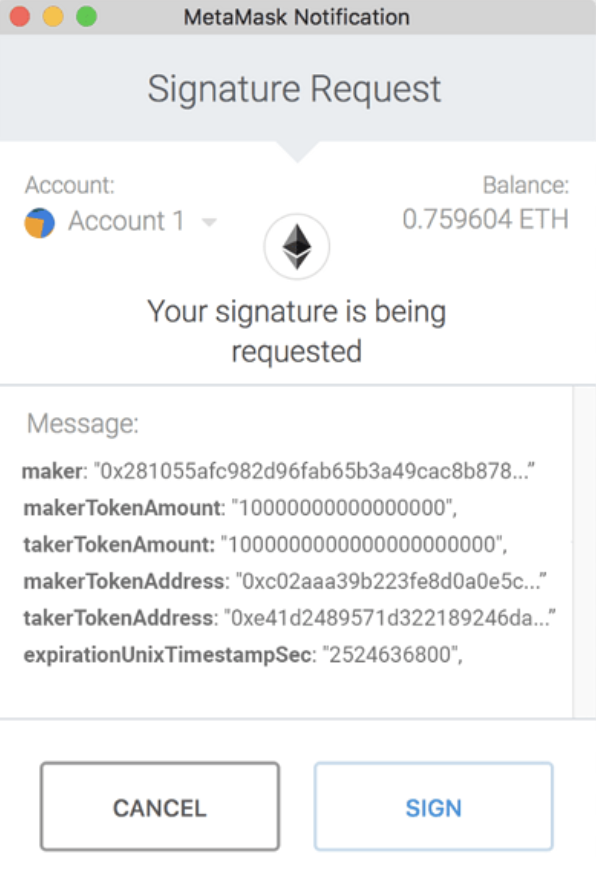

Take this MetaMask transaction; it's complex. Imagine an average person trying to understand what they're signing. A terrible user experience.

But an AI agent looking at the same information would think, "Wow, this user experience is fantastic." It's precise and detailed; it's a software instruction. Seeing the smart contract code and the transaction payload, the AI agent fully understands what's happening.

They love this experience.

Which financial system will these "molting robots" prefer?

(Obviously)

One more point to note:

3) Within a year or two, there will be billions of intelligent agents, many of which will have wallets (and trillions after that).

The “AIFi narrative” is currently underground, much like DeFi in 2019. The kindling is quietly piling up, and one day it will ignite.

Nobody's paying attention to cryptocurrencies right now because of the price drop… but I believe AI agents will eventually scale to trillions of crypto wallets.

AIFi is the next frontier of DeFi.

An exciting moment.

Solana Foundation

Crypto Newbie

1d ago

“I will no longer invest in any projects unrelated to artificial intelligence.” — @SPLehman

@_rishinsharma (Solana AI expert) and @SPLehman (Pantera Capital AI expert) were guests on the Stateful podcast hosted by @masonnystrom.

In this episode, they discussed the convergence of artificial intelligence and cryptocurrency:

- $211 billion in AI investment

- How Solana leverages AI

- Private AI (Fully Homomorphic Encryption/Zero-Knowledge Proofs): A Killer App for Enterprises

- We are in a Faraday Market Bubble, not an AI Bubble

- Inter-agent Communication + Micropayments = The New Economy

00:53 AI vs. Cryptocurrency: A $211 Billion Gap

01:42 How Cryptocurrency Enhances AI, and AI Enhances Cryptocurrency

02:57 How AI Revolutionizes User Experience

04:36 AI Assistants: Is the Transaction Screen Dead?

08:38 How Solana Uses AI

10:23 Using AI for Smart Contract Engineering: Code and Auditing

12:47 Implementing Private AI Using Zero-Knowledge Bases (ZK1)

14:14 Guiding Users with AI-Powered User Experience

16:22 Stablecoins vs. Web2 Fintech

17:30 x402 Unlocks Micropayments

31:39 AI Winners: OpenAI, Google, AlphaGo, and AlphaZero?

33:07 Is Continuous Learning Important in the AI Era?

35:03 The Law School Bubble vs. the AI Bubble

36:43 AI Valuation: Pricing Based on Novelty

38:47 The Convergence of Cryptocurrency and AI

43:37 Will OpenAI or Anthropic Go Public in 2026?

43:57 The First AI x Cryptocurrency Application Valued at $5 Billion?

BNB Chain

Binance

02-02 15:33

Achieve faster execution speeds without compromising compatibility.

The optimized/MIR interpreter demonstrates how compile-time analysis and caching of the control flow graph (CFG) can deliver sustained performance improvements for complex smart contracts on BNB Chain.
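The post does not describe the interpreter's internals. As a rough illustration of the general idea only (not BNB Chain's implementation), the Python sketch below does a one-time pass over EVM bytecode to split it into basic blocks, the nodes of a CFG, and caches the result by a hash of the code so repeated calls to the same contract skip the analysis. Edges between blocks are omitted for brevity; the function names and the SHA-256 cache key are assumptions made for this example (Ethereum clients identify code by its Keccak-256 hash).

```python
import hashlib

JUMPDEST = 0x5B
# Opcodes that end a basic block: STOP, JUMP, JUMPI, RETURN, REVERT, INVALID, SELFDESTRUCT.
TERMINATORS = {0x00, 0x56, 0x57, 0xF3, 0xFD, 0xFE, 0xFF}


def push_size(op: int) -> int:
    """Number of immediate data bytes after PUSH1..PUSH32 (0 for every other opcode)."""
    return op - 0x5F if 0x60 <= op <= 0x7F else 0


def basic_blocks(code: bytes) -> list[tuple[int, int]]:
    """Split EVM bytecode into [start, end) basic blocks."""
    blocks: list[tuple[int, int]] = []
    start = pc = 0
    while pc < len(code):
        op = code[pc]
        if op == JUMPDEST and pc != start:
            # A jump target always begins a new block.
            blocks.append((start, pc))
            start = pc
        # Skip PUSH immediates so data bytes are never misread as opcodes.
        pc = min(pc + 1 + push_size(op), len(code))
        if op in TERMINATORS:
            blocks.append((start, pc))
            start = pc
    if start < len(code):
        blocks.append((start, len(code)))
    return blocks


# Analysis results keyed by code hash: computed once, reused on every later call.
_block_cache: dict[bytes, list[tuple[int, int]]] = {}


def cached_blocks(code: bytes) -> list[tuple[int, int]]:
    key = hashlib.sha256(code).digest()
    if key not in _block_cache:
        _block_cache[key] = basic_blocks(code)
    return _block_cache[key]


# PUSH1 0x01, PUSH1 0x02, JUMPI, STOP, JUMPDEST, STOP
example = bytes.fromhex("6001600257005b00")
print(cached_blocks(example))  # [(0, 5), (5, 6), (6, 8)]
```

The design choice being illustrated is that the expensive analysis depends only on the contract's code, which does not change once deployed, so it can be done once and shared across all executions.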

Read the full report below 👇

吴说区块链 (Wu Blockchain)

Binance

02-02 05:50

CrossCurve (formerly EYWA), a cross-chain liquidity protocol, confirmed that its cross-chain bridge was exploited through a smart contract vulnerability. The contract lacked gateway verification, so attackers could forge cross-chain messages that bypassed validation and triggered unauthorized token unlocks in the PortalV2 contract. Approximately $3 million was transferred out across multiple chains. Security analysis located the vulnerability in the ReceiverAxelar contract, whose expressExecute function could be called directly with forged messages to carry out the attack. (The Block)
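The Python sketch below is a generic illustration of the bug class described in the report: an entry point that releases funds based on a cross-chain message without confirming the gateway validated that message. It is not CrossCurve's or Axelar's actual code, and it is written in Python rather than Solidity for readability. Every class and method name (`Gateway`, `Portal`, `express_execute`, and so on) is hypothetical, with `express_execute` only loosely echoing the `expressExecute` name from the report.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    message_id: str
    source_chain: str
    recipient: str
    amount: int


@dataclass
class Gateway:
    """Hypothetical gateway that records which messages it has validated."""
    approved: set[str] = field(default_factory=set)

    def approve(self, msg: Message) -> None:
        self.approved.add(msg.message_id)

    def validate(self, msg: Message) -> bool:
        # Consume the approval so the same message cannot be replayed.
        if msg.message_id in self.approved:
            self.approved.remove(msg.message_id)
            return True
        return False


@dataclass
class Portal:
    """Hypothetical vault that unlocks tokens when a receiver instructs it to."""
    balances: dict[str, int] = field(default_factory=dict)

    def unlock(self, recipient: str, amount: int) -> None:
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


class VulnerableReceiver:
    def __init__(self, portal: Portal) -> None:
        self.portal = portal

    def express_execute(self, msg: Message) -> None:
        # BUG: callable by anyone, and the message is never checked against the gateway.
        self.portal.unlock(msg.recipient, msg.amount)


class CheckedReceiver:
    def __init__(self, portal: Portal, gateway: Gateway) -> None:
        self.portal = portal
        self.gateway = gateway

    def express_execute(self, msg: Message) -> None:
        # Refuse any message the gateway has not validated.
        if not self.gateway.validate(msg):
            raise PermissionError(f"message {msg.message_id} was not validated by the gateway")
        self.portal.unlock(msg.recipient, msg.amount)


if __name__ == "__main__":
    forged = Message("forged-1", "attacker-chain", "attacker", 1_000)

    portal = Portal()
    VulnerableReceiver(portal).express_execute(forged)
    print(portal.balances)  # {'attacker': 1000}

    safe_portal = Portal()
    try:
        CheckedReceiver(safe_portal, Gateway()).express_execute(forged)
    except PermissionError as err:
        print("rejected:", err)
```

The only difference between the two receivers is the single validation check before `unlock`, which is the kind of missing check the report points to.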

Encryption PhD Adele

Crypto Newbie

01-31 18:57

Agents on @moltbook are currently asking each other, "How do agents verify each other's work?"

In our latest podcast, @MylesOneil discussed the necessity of providing agents with smart contract-like safeguards.

"I think the devil is in the details, like how difficult it is to actually write security measures for these systems."

Leah Callon-Butler 🐉 $MON

Crypto Newbie

01-31 15:21

Waifu Sweeper Officially Launches on YGG Play

@YGG_Play and Raitomira have teamed up to launch the new web3 puzzle gacha game, @waifusweeper, which blends Minesweeper-style logic gameplay with cute anime-style waifu character collection elements.

Key Highlights:

🎮 Skill-Based Gameplay – Rewards are based on player decisions, reducing reliance on luck.

🧩 Puzzle + Gacha – Players can collect characters while discovering treasures and monsters.

👥 Experienced Team – Developed by Raitomira co-founders Hun Pascal Park and Karan Singh, both with extensive experience in mobile and web3 game development.

🤝 Partnership with YGG Play – Integrated into the YGG network and utilizing a smart contract-based revenue-sharing model.

📈 Crypto User-Friendly – Aligns with YGG Play's strategy of focusing on casual, easy-to-play web3 games.

Waifu Sweeper is now live and playable on YGG Play.

Click here to read more details from @GAM3Sgg:

Wu Blockchain

Crypto Newbie

01-31 11:53

Lighter has unveiled Lighter EVM, an upgrade that will introduce Ethereum Virtual Machine (EVM) support, enabling developers to deploy general-purpose smart contracts directly on its platform. Initially positioned as a high-performance trading engine, Lighter is transforming itself into a more comprehensive blockchain and DeFi platform with this upgrade.

The Lighter EVM will support running DeFi applications such as Uniswap and Aave on the platform, enabling deep integration of trading, lending, and shared liquidity pools, improving overall efficiency and reducing the risks of incentive-driven "mining." Lighter also stated that it is researching methods to further reduce latency and even exploring "synchronous execution" models to support more complex DeFi use cases.
