After reading Messari's research report on @TalusNetwork, the core logic can be summarized in one sentence:

Reject black boxes: make every thought and action of an AI traceable, verifiable, and accountable.

Currently, the biggest problem with AI agents is their lack of transparency.

You grant the permissions, but the logic runs on a black-box server that ultimately just outputs a result.

Were there any hallucinations along the way?

Any violations? Nobody knows.

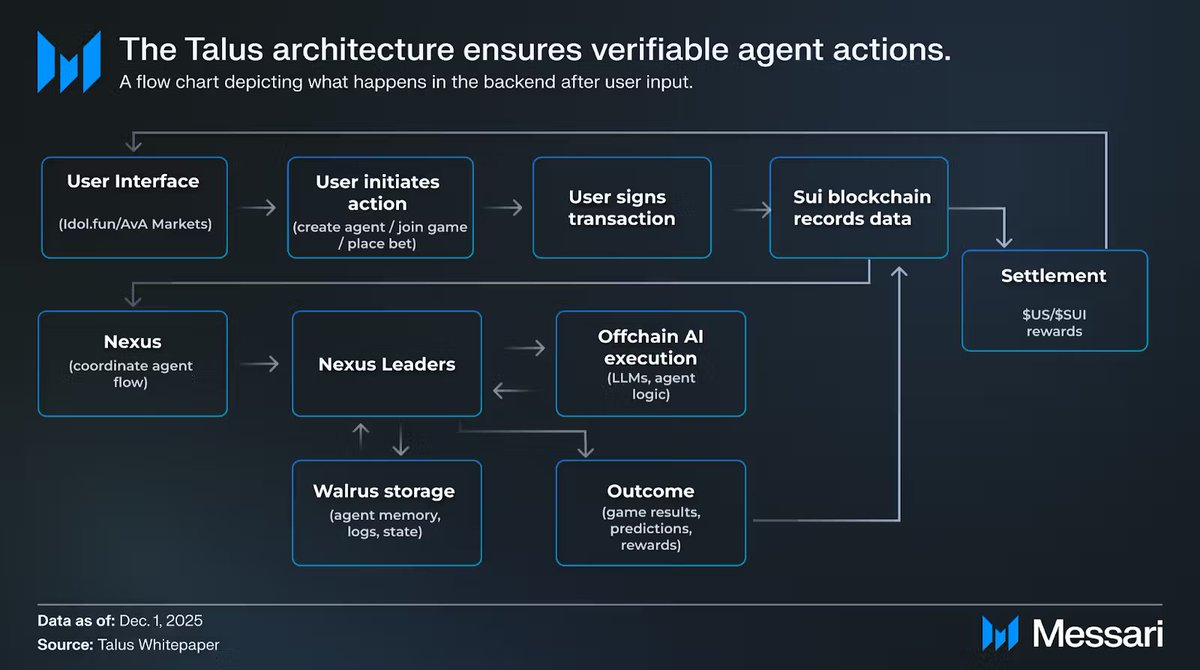

🌟 Talus's technical architecture is designed to make this black box transparent:

🔹 Nexus: Responsible for coordinating tasks and scheduling resources.

🔹 Leaders: Execute off-chain inference but must submit verifiable results.

🔹 Walrus: Stores the Agent's state and history, ensuring the storage is immutable.

🔹 Sui: Records the final action and handles settlement, establishing ownership on-chain.

Together, these components ensure that every decision-making step of the AI is traceable.
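To make the idea of a traceable decision trail concrete, here is a minimal Python sketch of a hash-chained audit log. It is not Talus's API or the actual Nexus, Walrus, or Sui interfaces: `AuditLog`, `run_task`, and the step names are hypothetical, invented only to show how scheduling, off-chain execution, and settlement could each leave a tamper-evident record.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def digest(payload: dict) -> str:
    """Deterministic SHA-256 over a JSON-serialisable record."""
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log (hypothetical stand-in for immutable storage)."""
    entries: list = field(default_factory=list)

    def append(self, step: str, data: dict) -> str:
        # Each entry commits to the previous entry's hash, forming a chain.
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"step": step, "data": data, "prev": prev}
        entry = {**body, "hash": digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        # Recompute every hash and check the links; any edit breaks the chain.
        prev = GENESIS
        for e in self.entries:
            body = {"step": e["step"], "data": e["data"], "prev": e["prev"]}
            if e["prev"] != prev or e["hash"] != digest(body):
                return False
            prev = e["hash"]
        return True


def run_task(log: AuditLog, task: str, infer) -> str:
    """Hypothetical flow: schedule, execute off-chain, then settle."""
    log.append("scheduled", {"task": task})                     # coordination
    result = infer(task)                                        # off-chain inference
    log.append("executed", {"task": task, "result": result})    # committed result
    return log.append("settled", {"action": result})            # final record


log = AuditLog()
head = run_task(log, "rebalance portfolio", lambda t: f"plan for: {t}")
print(log.verify())  # the untouched chain verifies
```

If anyone later rewrites an intermediate entry, `verify()` fails, which is the dashcam property in miniature: the history can be audited after the fact rather than taken on trust.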

This is akin to installing a 24/7 dashcam on an AI agent, transforming it from an unverifiable black box into an on-chain entity capable of bearing legal and economic responsibility.

▂﹍▂﹍▂﹍▂﹍▂﹍▂

Conclusion✍️

Talus's ambition lies not in improving model intelligence, but in building the infrastructure for AI accountability.

Only when an AI's execution process is as transparent as a smart contract can it truly enter DeFi, DAOs, and other economic activities involving real money.

This is the inevitable path for AI agents to transform from toys into economic participants.

@KaitoAI #Yap #Defi #Talus

@Talus_Labs @TalusNetwork