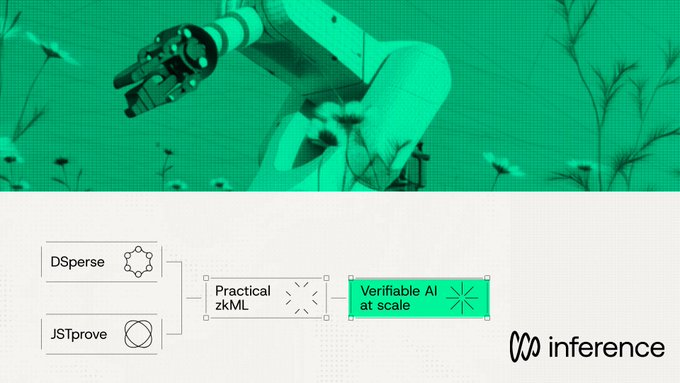

In the AI + Web3 wave, what truly matters isn't who shouts the loudest but who solves the underlying problems. Inference Labs (@inference_labs) belongs to the latter group. Their goal is direct: make AI inference an open infrastructure that is "verifiable, trustworthy, and composable" rather than a centralized black box. Combining Proof of Inference with zkML lets a model keep its intellectual property protected while still producing results that can be verified on-chain, a major step forward for any scenario that requires trusted output.

Inference Labs (@inference_labs) positions itself as the "trust layer for decentralized AI." In practice, this means that DeFi automated risk systems, GameFi dynamic difficulty generation, RWA risk-control models, and even enterprise compliance applications could all run AI through the same verifiable inference pipeline, without worrying about tampering, black boxes, or privacy leaks.

Their funding background also signals institutional confidence in this field: DAMAM, Delphi, Mechanism, and others are institutions that bet on long-term architecture rather than short-term hype. If Inference Labs (@inference_labs) can truly establish this path, AI will no longer be just a matter of calling APIs; it will become a fundamental capability of the Web3 ecosystem. This is a direction worth positioning for early.

#KaitoYap @KaitoAI #Yap