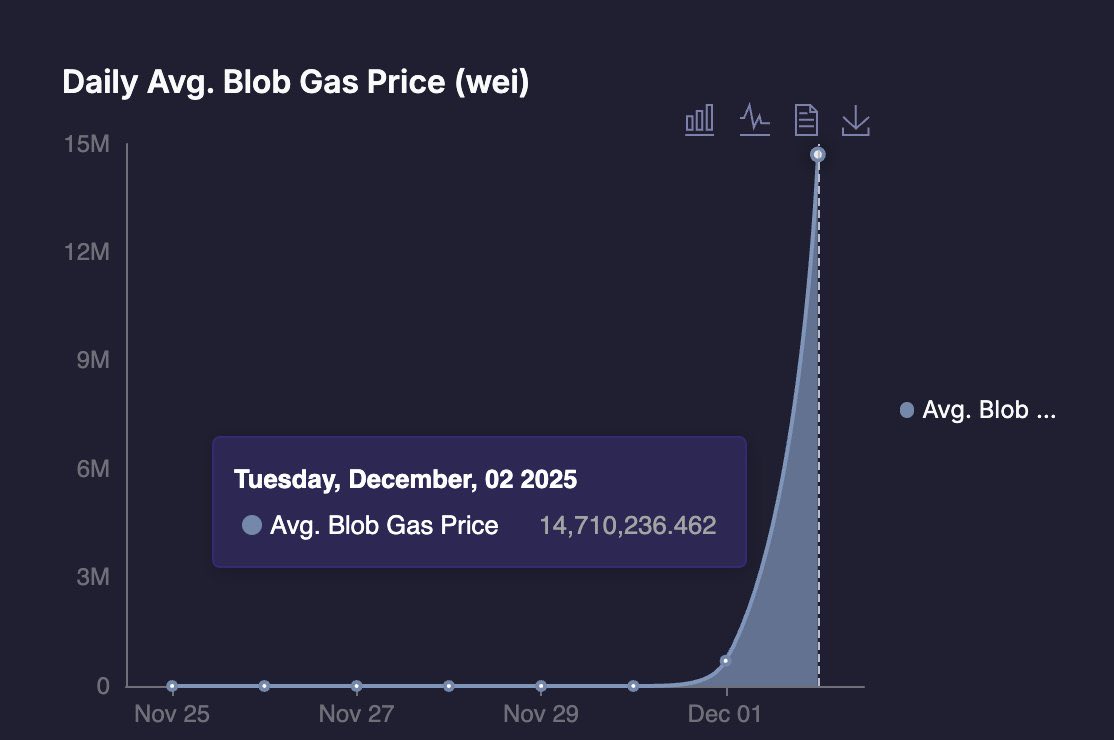

Why did Ethereum's Fusaka upgrade make the blob base fee jump from 1 wei to around 14 to 16 million wei within 24 hours, peaking at nearly 20 million wei and averaging about 14.7 million wei, a roughly 15-million-fold increase?

Why such a dramatic increase?

Mainly because the starting point was so low. The blob base fee had been sitting at its minimum of 1 wei (0.000000001 Gwei), which is practically free. Going from 1 wei to an average of about 14.7 million wei is an increase of nearly 15 million times. As the chart shows, fees rose sharply right after Fusaka went live.
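The unit arithmetic behind these figures can be checked in a few lines. This is a minimal sketch that uses only the numbers quoted above. The 20 million wei peak is rounded from "nearly 20 million", so treat it as approximate.

```python
# Unit arithmetic behind the "15-million-fold" figure, using the values quoted in the text.
WEI_PER_GWEI = 10**9

def wei_to_gwei(wei: int) -> float:
    """Convert an amount in wei to Gwei."""
    return wei / WEI_PER_GWEI

old_fee_wei = 1              # EIP-4844 minimum blob base fee
avg_fee_wei = 14_700_000     # average quoted after Fusaka
peak_fee_wei = 20_000_000    # "nearly 20 million wei", rounded (approximate)

fold_avg = avg_fee_wei // old_fee_wei
fold_peak = peak_fee_wei // old_fee_wei

print(f"old fee: {wei_to_gwei(old_fee_wei):.9f} Gwei")  # old fee: 0.000000001 Gwei
print(f"avg fee: {wei_to_gwei(avg_fee_wei):.4f} Gwei")  # avg fee: 0.0147 Gwei
print(f"increase (avg): {fold_avg:,}x")                 # increase (avg): 14,700,000x
print(f"increase (peak, approx.): {fold_peak:,}x")
```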

To put it simply, think of Ethereum mainnet (L1) as the central processor, where processing transactions directly is relatively expensive. Layer-2 (L2) systems were built as auxiliary processors that handle transactions much more cheaply. For security, however, once an L2 has processed a batch it must publish the data (the transaction records) to L1. Since the Dencun upgrade this data is posted in blobs: large chunks of temporary data that the EVM cannot read and that are not stored permanently on mainnet. Consensus-layer nodes check each blob against its KZG commitment, keep it for about 18 days (4096 epochs), and then prune it.

Blob gas fees are essentially the "data availability fees" that L2s pay to L1. When blobs were introduced in the Dencun upgrade (EIP-4844), the fee had no reserve price tied to execution costs. Its only floor was 1 wei, and whenever blob demand stayed below the target, the fee sank to that floor and stayed there, practically free. In effect, the network was subsidizing blobs: nodes were operating at a loss, providing the computing power for KZG verification free of charge.
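The sketch below illustrates why the fee got stuck at 1 wei under the pre-Fusaka rule. `fake_exponential` and the excess-blob-gas update follow the EIP-4844 spec. The target of 6 blobs and the update fraction 5,007,716 are assumed Prague-era (EIP-7691) values, and the 4-blobs-per-block demand is hypothetical. When usage stays below target, excess blob gas never leaves zero, so the price stays at the minimum.

```python
# Sketch of EIP-4844 blob pricing WITHOUT a reserve price.
# TARGET_BLOBS and UPDATE_FRACTION are assumed Prague-era values (EIP-7691).
GAS_PER_BLOB = 2**17             # 131072 blob gas per blob
MIN_BASE_FEE_PER_BLOB_GAS = 1    # wei
TARGET_BLOBS = 6                 # assumption
UPDATE_FRACTION = 5_007_716      # assumption

def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator), as in EIP-4844."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator

def blob_base_fee(excess_blob_gas: int) -> int:
    """Blob base fee in wei per blob gas."""
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, UPDATE_FRACTION)

def next_excess_blob_gas(excess: int, blob_gas_used: int) -> int:
    """Pre-Fusaka rule: excess only accumulates while usage exceeds the target."""
    target = TARGET_BLOBS * GAS_PER_BLOB
    if excess + blob_gas_used < target:
        return 0
    return excess + blob_gas_used - target

# Hypothetical demand: every block carries 4 blobs, below the target of 6.
excess = 0
for _ in range(7200):  # roughly one day of 12-second slots
    excess = next_excess_blob_gas(excess, 4 * GAS_PER_BLOB)

print(excess, blob_base_fee(excess))  # 0 1
```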

Fusaka includes EIP-7918, which adds a "reserve price" to the blob fee: the blob base fee is no longer allowed to stay below 1/16 of the L1 execution base fee. In the EIP this ratio is expressed as BLOB_BASE_COST / GAS_PER_BLOB, that is, 2^13 / 2^17 = 8192 / 131072 = 1/16.
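As we understand the EIP-7918 pseudocode, the reserve is not a hard clamp on the price. Instead it changes how excess blob gas is updated: while blob gas is cheaper than BLOB_BASE_COST / GAS_PER_BLOB of the execution base fee, the target is no longer subtracted, so excess (and therefore the fee) only ratchets upward. The sketch below follows that shape. The blob schedule (target 6, max 9, update fraction 5,007,716), the 0.2352 Gwei execution base fee, and the 6-blobs-per-block demand are all assumptions for illustration, not measured data.

```python
# Sketch of the EIP-7918 reserve-price change to calc_excess_blob_gas.
# Schedule values and the execution base fee below are assumptions.
GAS_PER_BLOB = 2**17          # 131072
BLOB_BASE_COST = 2**13        # 8192
MIN_BASE_FEE_PER_BLOB_GAS = 1
TARGET_BLOBS, MAX_BLOBS = 6, 9
UPDATE_FRACTION = 5_007_716

def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator), as in EIP-4844."""
    i, output = 1, 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator

def blob_base_fee(excess: int) -> int:
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess, UPDATE_FRACTION)

def reserve_binds(excess: int, exec_base_fee: int) -> bool:
    """True while a blob is priced below BLOB_BASE_COST units of execution gas."""
    return BLOB_BASE_COST * exec_base_fee > GAS_PER_BLOB * blob_base_fee(excess)

def next_excess_blob_gas(excess: int, blob_gas_used: int, exec_base_fee: int) -> int:
    target = TARGET_BLOBS * GAS_PER_BLOB
    if excess + blob_gas_used < target:
        return 0
    if reserve_binds(excess, exec_base_fee):
        # Below the reserve: skip subtracting the target, so excess only grows.
        return excess + blob_gas_used * (MAX_BLOBS - TARGET_BLOBS) // MAX_BLOBS
    # Otherwise the original EIP-4844 update applies.
    return excess + blob_gas_used - target

# Hypothetical run: execution base fee 0.2352 Gwei, 6 blobs in every block.
exec_base_fee = 235_200_000  # wei
excess, blocks = 0, 0
while reserve_binds(excess, exec_base_fee) and blocks < 7200:
    excess = next_excess_blob_gas(excess, TARGET_BLOBS * GAS_PER_BLOB, exec_base_fee)
    blocks += 1

print(blocks, blob_base_fee(excess))
```

Under these assumptions the fee climbs from 1 wei to the reserve in a few hundred blocks, around an hour of 12-second slots. The real path depends on actual blob usage and execution base fees.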

In other words, blob fees used to be able to sit at 1 wei, practically free. Now the blob base fee is held at or above (L1 execution base fee) / 16. When execution costs rise, the blob reserve price rises with them, and even when execution costs are low there is a guaranteed minimum. Historically that minimum would have been around 0.01 to 0.5 Gwei, which is 10 million to 500 million times the previous 0.000000001 Gwei. Shortly after Fusaka went live, the actual average reached about 14.7 million wei (0.0147 Gwei), hence the roughly 15-million-fold increase.
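The reserve arithmetic can be sketched directly from the two constants. The execution base fees below are hypothetical inputs chosen to reproduce the 0.01 to 0.5 Gwei range mentioned above. The last line inverts the rule to show which execution base fee would make 14.7 million wei exactly the reserve.

```python
# Reserve price implied by EIP-7918 for a few hypothetical execution base fees.
GAS_PER_BLOB = 2**17     # 131072
BLOB_BASE_COST = 2**13   # 8192, so the ratio is exactly 1/16
WEI_PER_GWEI = 10**9

def blob_reserve_price(exec_base_fee_wei: int) -> int:
    """Reserve blob base fee (wei per blob gas) for a given execution base fee."""
    return BLOB_BASE_COST * exec_base_fee_wei // GAS_PER_BLOB

# Hypothetical execution base fees, in wei: 0.16 Gwei, 1 Gwei, 8 Gwei.
for exec_wei in (160_000_000, 1_000_000_000, 8_000_000_000):
    floor_wei = blob_reserve_price(exec_wei)
    print(f"exec {exec_wei / WEI_PER_GWEI} Gwei -> blob floor "
          f"{floor_wei / WEI_PER_GWEI} Gwei ({floor_wei:,}x the old 1 wei)")

# Execution base fee at which 14.7 million wei would be exactly the reserve.
implied_exec_wei = 14_700_000 * GAS_PER_BLOB // BLOB_BASE_COST
print(implied_exec_wei)  # 235200000
```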

The rationale for this design is that blobs do not just take up storage; nodes also have to do computation to verify them (verifying KZG proofs is about 15 times as expensive as a single point evaluation). Previously L2s posted blobs essentially for free. Now they pay a "reasonable rent" that covers at least part of the actual cost.
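One way to read the "rent" is that, at the reserve, a whole blob costs the same as BLOB_BASE_COST (8192) units of execution gas. This is a minimal sketch using a hypothetical 0.2352 Gwei execution base fee. It compares that minimum with the old cost of a blob at 1 wei per blob gas.

```python
# At the EIP-7918 reserve, one blob costs as much as BLOB_BASE_COST units of execution gas.
GAS_PER_BLOB = 2**17
BLOB_BASE_COST = 2**13
WEI_PER_ETH = 10**18

def min_cost_per_blob_wei(exec_base_fee_wei: int) -> int:
    """Minimum fee for one full blob, in wei, when priced at the reserve."""
    reserve = BLOB_BASE_COST * exec_base_fee_wei // GAS_PER_BLOB  # wei per blob gas
    return reserve * GAS_PER_BLOB                                   # wei per blob

exec_base_fee = 235_200_000      # hypothetical 0.2352 Gwei
old_cost = 1 * GAS_PER_BLOB      # a blob at the old 1 wei floor: 131072 wei
new_cost = min_cost_per_blob_wei(exec_base_fee)

print(old_cost, new_cost, new_cost / WEI_PER_ETH)
```

Because this execution base fee is divisible by 16, the per-blob minimum equals exactly 8192 × the execution base fee.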

This is healthy for the fee market because prices can move freely again. The network can use price increases to throttle blob traffic and avoid both congestion and waste. Fusaka also ships PeerDAS (EIP-7594, peer data availability sampling), which lets nodes confirm that blob data is available by downloading only small samples instead of entire blobs, potentially multiplying blob capacity several times over.
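How strongly can the price react to traffic? The sketch below uses the continuous form of the EIP-4844 update, fee × e^((used − target) / update_fraction), to estimate the per-block change. The schedule values (target 6, max 9, update fraction 5,007,716) are assumed Prague-era parameters. The sketch ignores the EIP-7918 reserve branch and the reset at zero excess, so it is only an approximation.

```python
import math

GAS_PER_BLOB = 2**17
TARGET_BLOBS, MAX_BLOBS = 6, 9   # assumed schedule
UPDATE_FRACTION = 5_007_716      # assumed

def fee_multiplier(blobs_in_block: int) -> float:
    """Approximate factor by which the blob base fee changes after one block.

    Ignores the EIP-7918 reserve branch and the reset when excess would go below zero.
    """
    delta = (blobs_in_block - TARGET_BLOBS) * GAS_PER_BLOB
    return math.exp(delta / UPDATE_FRACTION)

for n in (0, TARGET_BLOBS, MAX_BLOBS):
    print(n, round(fee_multiplier(n), 4))
```

Under these assumptions a full block raises the fee by roughly 8% and an empty block lowers it by roughly 15%. This is the lever that lets price rises throttle blob traffic.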

This is also good news for ETH burning, since blob fees are burned. Based on a backtest with historical data (assuming the upgrade had launched in June 2025), Blockworks estimates that roughly eight times as much ETH could have been burned. Bitwise's analysis suggests that, with L2 transaction volume growing in 2026, blobs could burn an additional 200,000 to 400,000 ETH within a year, contributing 30 to 50% of total burned ETH. These look like optimistic estimates, and the actual figure will depend on how much L2 transaction volume grows.
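A back-of-the-envelope formula shows what drives such burn estimates. This sketch assumes a block in every 12-second slot (an upper bound, since missed slots are ignored) and treats the average blob base fee and blobs per block as hypothetical inputs. It does not reproduce the Blockworks or Bitwise methodology.

```python
# Hypothetical upper-bound estimate of ETH burned by blob fees in one year.
GAS_PER_BLOB = 2**17
WEI_PER_ETH = 10**18
SECONDS_PER_YEAR = 365 * 24 * 60 * 60
SLOT_SECONDS = 12

def annual_blob_burn_eth(avg_blob_base_fee_wei: int, avg_blobs_per_block: float) -> float:
    """Yearly blob-fee burn in ETH, assuming a block in every slot (no missed slots)."""
    blocks_per_year = SECONDS_PER_YEAR // SLOT_SECONDS  # 2,628,000
    burned_wei = avg_blob_base_fee_wei * GAS_PER_BLOB * avg_blobs_per_block * blocks_per_year
    return burned_wei / WEI_PER_ETH

# Hypothetical inputs: 14.7 million wei average fee, 6 blobs per block.
print(round(annual_blob_burn_eth(14_700_000, 6), 2))
```

The result scales linearly with both inputs, so any projection hinges on assumptions about future blob prices and L2 volume.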