Claude Skills had only just become popular when, yesterday, DeepSeek released a new paper on Engram that essentially asks the market: "Are you on the wrong track?" In the LLM world, every day really is a battle of titans! 😱

A simple comparison shows the difference. Anthropic gives its model a super secretary that organizes 200 documents and remembers every conversation. DeepSeek goes further and performs brain surgery on its model, giving it a "memory organ" that answers in O(1) constant time, like looking a word up in a dictionary, without activating layer after layer of the neural network.

This problem should have been solved long ago.

Ever since the Transformer architecture, large models have processed knowledge like rote learners. Every time you ask "Who was Princess Diana?", the model has to run the question through its entire 175B-parameter brain from beginning to end. How much computing power does that waste?

It's like reading through the entire Oxford Dictionary from A to Z every time you want to look up a single word. How absurd! Even with the currently popular MoE architecture, recalling one obscure fact still means activating expensive expert networks.
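To put the waste in rough numbers, here is a back-of-the-envelope sketch using the common rule of thumb that a Transformer forward pass costs about 2 FLOPs per active parameter per token. The 175B figure comes from the text above; the 37B-active MoE figure is DeepSeek-V3's published configuration (671B total), used here only as an illustrative comparison. The function name is hypothetical.

```python
# Rule of thumb (an approximation, not an exact count): a forward pass costs
# roughly 2 FLOPs per *active* parameter per token (one multiply + one add).
def forward_flops_per_token(active_params: float) -> float:
    return 2.0 * active_params


dense_175b = forward_flops_per_token(175e9)     # dense model: every parameter is active
moe_37b_active = forward_flops_per_token(37e9)  # MoE: e.g. ~37B of 671B active per token

print(f"dense 175B:       {dense_175b:.2e} FLOPs/token")      # 3.50e+11
print(f"MoE (37B active): {moe_37b_active:.2e} FLOPs/token")  # 7.40e+10
```

Even the MoE model still spends tens of billions of FLOPs per token just to surface a fact, whereas a table lookup only reads a handful of stored vectors.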

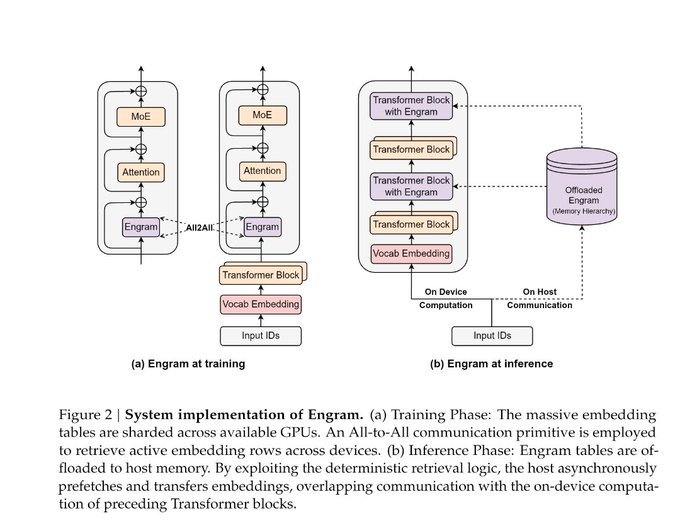

1) Engram's core breakthrough: Giving the model a "memory organ"

Engram's idea is simple: it pulls static factual knowledge out of "parameter memory" and puts it into a scalable hash table, using N-gram partitioning and multi-head hash mapping to achieve O(1) constant-time lookup.
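The lookup idea can be sketched in plain Python. This is a minimal illustration of hashed N-gram memory in general, not DeepSeek's implementation: token IDs are grouped into N-grams, each of several hash heads maps the N-gram to a row in its own table, and the rows are concatenated. The table sizes, the blake2b hash, and all function names are hypothetical; the paper's actual hashing scheme and how the result is fused into the network may differ.

```python
import hashlib
import random

# Hypothetical toy sizes, for illustration only.
TABLE_SIZE = 1024  # rows per hash head
DIM = 8            # width of each memory vector
NUM_HEADS = 4      # independent hash heads
NGRAM = 2          # look up bigrams of token IDs

rng = random.Random(0)
# One table per head; in a real model these rows would be trained parameters.
tables = [
    [[rng.gauss(0.0, 0.02) for _ in range(DIM)] for _ in range(TABLE_SIZE)]
    for _ in range(NUM_HEADS)
]


def ngrams(tokens, n=NGRAM):
    """The n-gram ending at each position (shorter at the start of the sequence)."""
    return [tuple(tokens[max(0, i - n + 1): i + 1]) for i in range(len(tokens))]


def bucket(head, gram):
    """Deterministically hash an n-gram to a row index, differently for each head."""
    key = f"{head}:{','.join(map(str, gram))}".encode()
    digest = hashlib.blake2b(key, digest_size=8).digest()
    return int.from_bytes(digest, "little") % TABLE_SIZE


def lookup(tokens):
    """Return one memory vector per position: the rows from every head, concatenated."""
    out = []
    for gram in ngrams(tokens):
        vec = []
        for head in range(NUM_HEADS):
            # Constant work per token: one hash and one row read per head,
            # no matter how large TABLE_SIZE grows.
            vec.extend(tables[head][bucket(head, gram)])
        out.append(vec)
    return out


memory = lookup([101, 2023, 2003, 1037])
print(len(memory), len(memory[0]))  # 4 32
```

Using several heads is what keeps collisions manageable: two different N-grams may land in the same row of one table, but they are unlikely to collide in every head at once.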

In layman's terms: context-management approaches still have the AI hold a manual and consult it whenever it hits a problem. Engram instead aims to grow a new organ in the brain dedicated to instantly "recalling" fixed common-sense knowledge, with no further reasoning required.

How large is the effect? A 27B-parameter model improves performance on knowledge tasks (MMLU) by 3.4%, and long-context retrieval jumps from 84% to 97%. Crucially, these memory parameters can be offloaded to inexpensive DDR memory or even hard drives, adding almost no extra inference cost.
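Why offloading can be cheap is easiest to see in a sketch. Assuming, as the offloading claim implies, that lookup addresses depend only on the input token IDs and not on hidden activations, the system can work out which rows it needs before the forward pass and fetch just those rows from slower, cheaper storage. The file layout, the multiplicative hash, and the function names below are hypothetical stand-ins, written with the Python standard library only.

```python
import os
import tempfile
from array import array

ROWS, DIM = 4096, 8                      # hypothetical toy table shape
ROW_BYTES = DIM * array("f").itemsize    # float32 rows

# Write a toy memory table to a file, standing in for cheap DRAM/SSD storage.
# Row r is filled with the value r so reads are easy to check.
path = os.path.join(tempfile.mkdtemp(), "engram_table.bin")
with open(path, "wb") as f:
    for r in range(ROWS):
        array("f", [float(r)] * DIM).tofile(f)


def rows_needed(token_ids):
    # Hypothetical index function: it depends only on the input token IDs,
    # so it can run before the forward pass and the reads can be prefetched.
    return sorted({(t * 2654435761) % ROWS for t in token_ids})


def fetch_rows(path, indices):
    # Read only the requested rows; the rest of the table stays on disk.
    out = {}
    with open(path, "rb") as f:
        for i in indices:
            f.seek(i * ROW_BYTES)
            row = array("f")
            row.frombytes(f.read(ROW_BYTES))
            out[i] = row.tolist()
    return out


idx = rows_needed([17, 42, 17, 99])
rows = fetch_rows(path, idx)
print(len(idx), "rows read out of", ROWS)  # 3 rows read out of 4096
```

The repeated token 17 maps to the same row, so only three rows are read; the cost scales with the number of tokens, not with the size of the table on disk.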

2) Is this upending RAG and the GPU arms race?

If Engram truly succeeds, the first to feel the impact won't be OpenAI, but the RAG (Retrieval-Augmented Generation) approach and NVIDIA's GPU memory business, especially RAG over public knowledge bases.

That's because RAG essentially has the model "search" an external database, which is slow, loosely integrated with the model, and requires maintaining a vector database. Engram builds the memory module directly into the model architecture, so lookups are fast and accurate, and it uses context gating to filter out noise from hash collisions.
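Context gating can be illustrated with a small sketch. Because of hash collisions, a retrieved row may actually hold memory for a different N-gram, so the model compares the retrieved key with the current hidden state and scales the retrieved value by a gate between 0 and 1. The RMSNorm-plus-scaled-dot-product-plus-sigmoid form below is an assumption for illustration, not the paper's exact formula, and the function names are hypothetical.

```python
import math


def rms_norm(x, eps=1e-6):
    scale = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / scale for v in x]


def context_gate(hidden, key, value):
    """Scale a retrieved memory value by how well its key matches the context."""
    h, k = rms_norm(hidden), rms_norm(key)
    score = sum(a * b for a, b in zip(h, k)) / math.sqrt(len(h))
    alpha = 1.0 / (1.0 + math.exp(-score))  # sigmoid: gate in (0, 1)
    return alpha, [alpha * v for v in value]


hidden = [1.0, 2.0, 3.0, 4.0]
value = [0.5, -0.5, 0.5, -0.5]
a_match, _ = context_gate(hidden, hidden, value)                # key agrees with context
a_clash, _ = context_gate(hidden, [-v for v in hidden], value)  # likely a collision
print(round(a_match, 3), round(a_clash, 3))  # 0.881 0.119
```

A memory that fits the context passes through mostly intact, while a collided, contradictory one is damped rather than injected at full strength.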

Moreover, the "U-shaped scaling law" described in the paper is very exciting. Performance is best when the model allocates about 20-25% of its parameters to Engram as a "memory hard drive" and leaves the remaining 75-80% to the traditional neural network as the "inference brain"; shifting the split too far in either direction makes results worse. On top of that, performance improves logarithmically with memory size, so every tenfold increase in memory brings a further steady gain.
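What "improves logarithmically" means can be written out. Assume, purely for illustration, that performance P grows log-linearly with memory size M, with fitted constants a and b (hypothetical symbols, not values from the paper):

```latex
P(M) = a + b \log_{10} M
\quad\Longrightarrow\quad
P(10M) - P(M) = \bigl(a + b\log_{10}(10M)\bigr) - \bigl(a + b\log_{10} M\bigr) = b\log_{10} 10 = b
```

Under this assumption, each tenfold increase in memory adds the same fixed gain b: the returns never stop, but every further step needs ten times more memory, which is exactly why cheap memory matters.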

Doesn't this completely shatter the belief that "more parameters = smarter," turning the arms race of "endlessly stacking H100s" into an efficiency game of "moderate computing power + massive cheap memory"?

That said, it remains unclear whether DeepSeek V4, expected around the Spring Festival, will unleash the full power of combining Engram with its earlier mHC.

This paradigm shift from "computing power is king" to a dual-engine approach of "computing power + memory" is likely to trigger another fierce battle. It remains to be seen how giants like OpenAI and Anthropic, who control computing resources, will respond.