I put 200 USDT into the ZAMA @zama NFT, and it can now sell for $1,600! That's an 8x return, not bad at all! 🪂

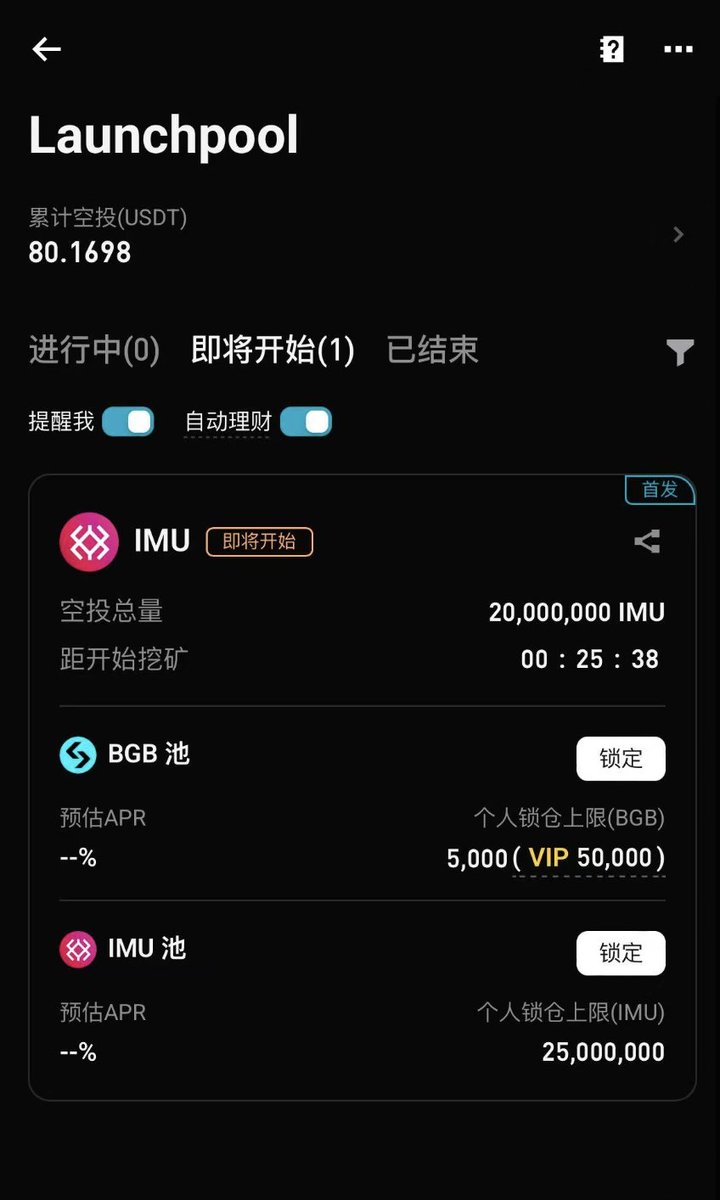

As for taking part in the public offering? My cost there seems to have been 0.05, so I probably lost a little money? 🤣
