🫡 @Mira_Network wants to break the deadlock with a different approach: rather than letting AI act on its own unchecked output, build a system that sits "above" the AI and specifically verifies its claims. Everyone's talking about how powerful AI is, but its real-world applications are actually quite limited.

Ultimately, AI's biggest problem right now is unreliability. It can produce content that seems logical, but on closer inspection you often find it's laced with falsehoods. That's tolerable in chatbots, but it becomes completely untenable in high-stakes scenarios like healthcare, law, and finance.

Why does AI suffer from this? A core reason is that it struggles to balance accuracy and stability. To get more stable output, you need cleaner, more curated training data, which easily introduces bias. Conversely, to make the model more realistic and comprehensive, you need to feed it a lot of conflicting information, which makes it less reliable and more prone to hallucinations.

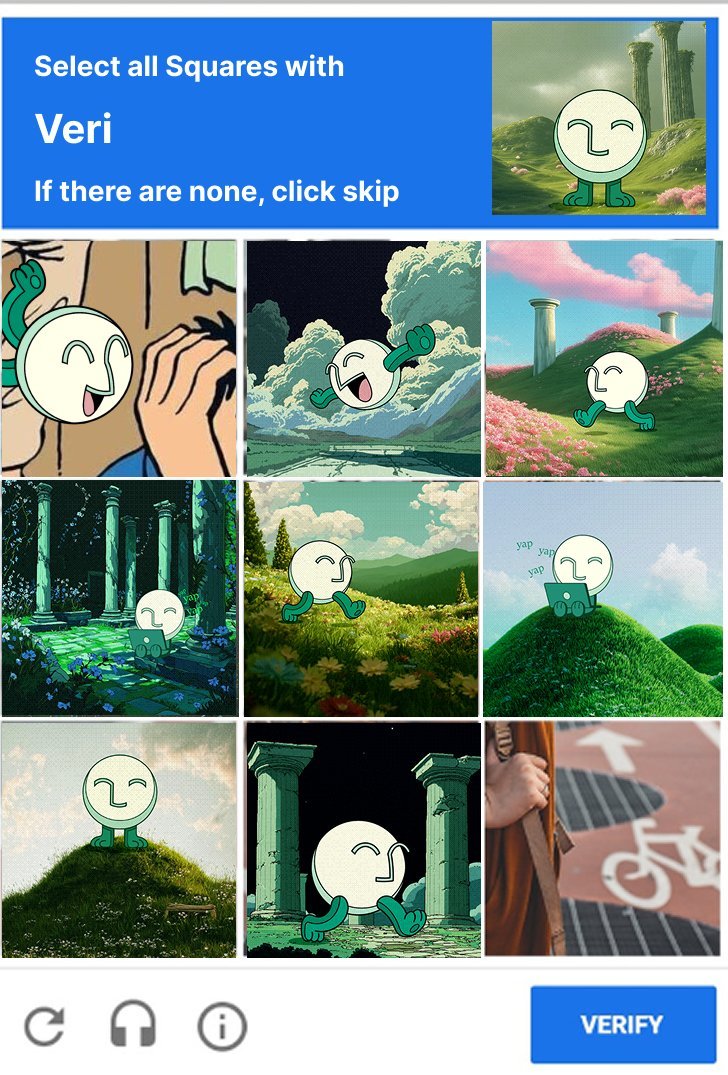

Mira's approach is a bit like giving AI a "fact checker." The AI first outputs content, which Mira then breaks down into smaller claims. Multiple independent models verify each claim to determine whether it is reliable. Once these models reach consensus, on-chain nodes generate a verification report, effectively marking the result as "verified."
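To make that flow concrete, here's a rough Python sketch of the "split into claims, let several verifiers vote, accept on consensus" idea. It is not Mira's actual code or API. Every name in it (`split_into_claims`, `verify_output`, `strict_verifier`, `lenient_verifier`, the 2/3 threshold) is a made-up assumption for illustration, the "models" are toy functions, and the on-chain report step is left out entirely.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical: a "verifier" is any function that judges a single claim.
Verifier = Callable[[str], bool]

# Toy knowledge base standing in for what a real model would know.
KNOWN_FACTS = {"Paris is the capital of France"}


@dataclass
class ClaimResult:
    claim: str
    votes_for: int
    votes_total: int
    verified: bool


def split_into_claims(text: str) -> List[str]:
    """Naive splitter: treat each sentence as one claim (an assumption)."""
    return [part.strip() for part in text.split(".") if part.strip()]


def strict_verifier(claim: str) -> bool:
    """Toy verifier: accepts only claims found in KNOWN_FACTS."""
    return claim in KNOWN_FACTS


def lenient_verifier(claim: str) -> bool:
    """Toy verifier that accepts everything, i.e. an unreliable model."""
    return True


def verify_output(
    text: str, verifiers: List[Verifier], threshold: float = 2 / 3
) -> List[ClaimResult]:
    """Mark a claim as verified when the share of 'yes' votes meets the threshold."""
    results = []
    for claim in split_into_claims(text):
        votes = [verifier(claim) for verifier in verifiers]
        votes_for = sum(votes)
        verified = votes_for / len(votes) >= threshold
        results.append(ClaimResult(claim, votes_for, len(votes), verified))
    return results


if __name__ == "__main__":
    output = "Paris is the capital of France. The Moon is made of cheese."
    panel = [strict_verifier, lenient_verifier, strict_verifier]
    for result in verify_output(output, panel):
        status = "verified" if result.verified else "rejected"
        print(f"{result.claim!r}: {result.votes_for}/{result.votes_total} -> {status}")
```

The point of the toy panel: one sloppy verifier that says yes to everything can't push a false claim through on its own, because the other two outvote it. That only holds if the verifiers are genuinely independent, which is the hard part in practice.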

This approach is interesting because it doesn't modify the AI's "brain," but rather gives it a "supervision system." If this system truly works, we might be able to confidently let AI take on more complex and risky tasks, such as automated contract writing, code review, and even independent decision-making. That would be true AI infrastructure.

Key to this is its presence on the @KaitoAI leaderboard. Recently, @arbitrum, @Aptos, @0xPolygon, @shoutdotfun, and $ENERGY have also been trending.